Authenticate to Azure cloud storage

Suppose you have successfully deployed a cluster on CelerData Cloud BYOC, and the cluster-related cloud resources such as your virtual machine (VM) and cloud storage are already located under your Azure account.

This topic introduces how to enable a CelerData cluster to access your self-managed Azure cloud storage such as Azure Blob Storage or Azure Data Lake Storage in the following scenarios:

- Query Hive, Iceberg, Hudi, and Delta Lake tables in Azure Storage.

- Batch load data from Azure Storage.

The following table lists the authentication methods that are supported for various Azure storage services.

| Azure storage service | Authentication method |

|---|---|

| Azure Blob Storage |

|

| Azure Data Lake Storage Gen2 (ADLS Gen2) |

|

| Azure Data Lake Storage Gen1 (ADLS Gen1) |

|

The examples of data loading in this topic use file1.parquet stored in your Azure storage as a sample data file and use table1 which belongs to a database named mydatabase within your CelerData cluster as a sample destination table. Suppose that file1.parquet and table1 have the same schema.

Azure Blob Storage

When you access Blob Storage, you may need to input a file path:

- If your storage account allows access over HTTP, use the wasb protocol and write the file path as

wasb://<container_name>@<storage_account_name>.blob.core.windows.net/<path>/<file_name>. - If your storage account allows access over HTTPS, use the wasbs protocol and write the file path as

wasbs://<container_name>@<storage_account_name>.blob.core.windows.net/<path>/<file_name>.

Shared Key (access key of storage account)

Access keys authenticate your Azure-based CelerData cluster's requests to your storage account. For more information about access keys, see Manage account access keys - Azure Storage.

NOTICE

This authentication method now supports external catalog and Broker Load. Note that for external catalogs, the storage account you use for authentication must be the one used to store the data of your external data source.

Parameters

Configure the following parameters as credentials for accessing your Blob Storage.

| Parameter | Description |

|---|---|

| azure.blob.storage_account | The name of your Blob storage account. |

| azure.blob.shared_key | The shared key (access key) of your Blob storage account. |

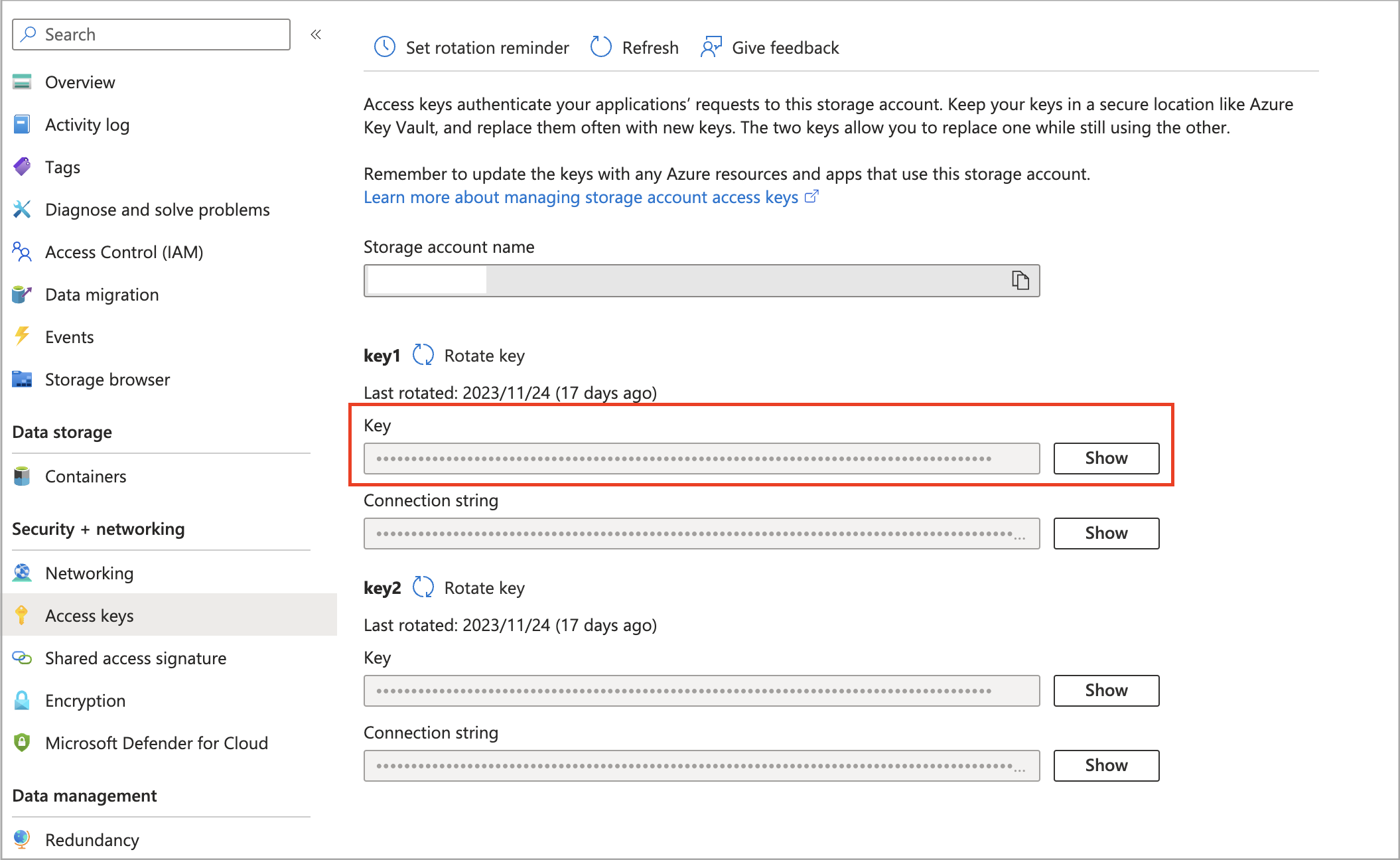

Follow these steps to obtain the shared key (access key) of your Blob storage account:

-

Sign in to the Microsoft Azure portal and navigate to the Storage accounts service page.

-

Find your storage account and click its name.

-

In the left-side navigation pane, choose Access keys.

-

On the page that appears, click Show next to the key you want to use, and then click the copy icon to copy the key.

Examples

External catalog

Create a Hive catalog named hive_catalog_azure:

CREATE EXTERNAL CATALOG hive_catalog_azure

PROPERTIES

(

"type" = "hive",

"hive.metastore.type" = "hive",

"hive.metastore.uris" = "thrift://xx.xx.xx.xx:9083",

"azure.blob.storage_account" = "<storage_account_name>",

"azure.blob.shared_key" = "<storage_account_shared_key>"

);

CelerData now supports Hive catalog, Iceberg catalog, Hudi catalog, and Delta Lake catalog. For the detailed syntax and parameters for creating these external catalogs, see Hive catalog, [Iceberg ../data_source/catalog/iceberg/iceberg_catalog.mdatalog.md), [Hudi ../data_source/catalog/hudi_catalog.mdatalog.md), and Delta Lake catalog.

Broker Load

Create a Broker Load job to load data from the data file file1.parquet to the destination table table1 which belongs to the database named mydatabase within the CelerData cluster:

LOAD LABEL mydatabase.label_blob_key

(

DATA INFILE("wasb[s]://<container_name>@<storage_account_name>.blob.core.windows.net/<path>/file1.parquet")

INTO TABLE table1

FORMAT AS "parquet"

)

WITH BROKER

(

"azure.blob.storage_account" = "<storage_account_name>",

"azure.blob.shared_key" = "<storage_account_shared_key>"

)

PROPERTIES

(

"timeout" = "3600"

);

For the detailed syntax and parameters for creating a Broker Load job, see BROKER LOAD.

For a typical example of loading data from Azure with table creation DDL, see Batch load data from Azure cloud storage.

SAS Token

A shared access signature (SAS) is a URI that grants restricted access to your storage account. For more information about SAS tokens, see Delegate access by using a shared access signature - Azure Storage.

NOTICE

This authentication method now supports external catalog and Broker Load. Note that for external catalogs, the storage account you use for authentication must be the one used to store the data of your external data source.

Parameters

Configure the following parameters as credentials for accessing your Blob Storage.

| Parameter | Description |

|---|---|

| azure.blob.storage_account | The name of your Blob storage account. |

| azure.blob.container | The name of the Blob container that stores your data within your Blob storage account. |

| azure.blob.sas_token | The SAS token that is used to access your Blob storage account. |

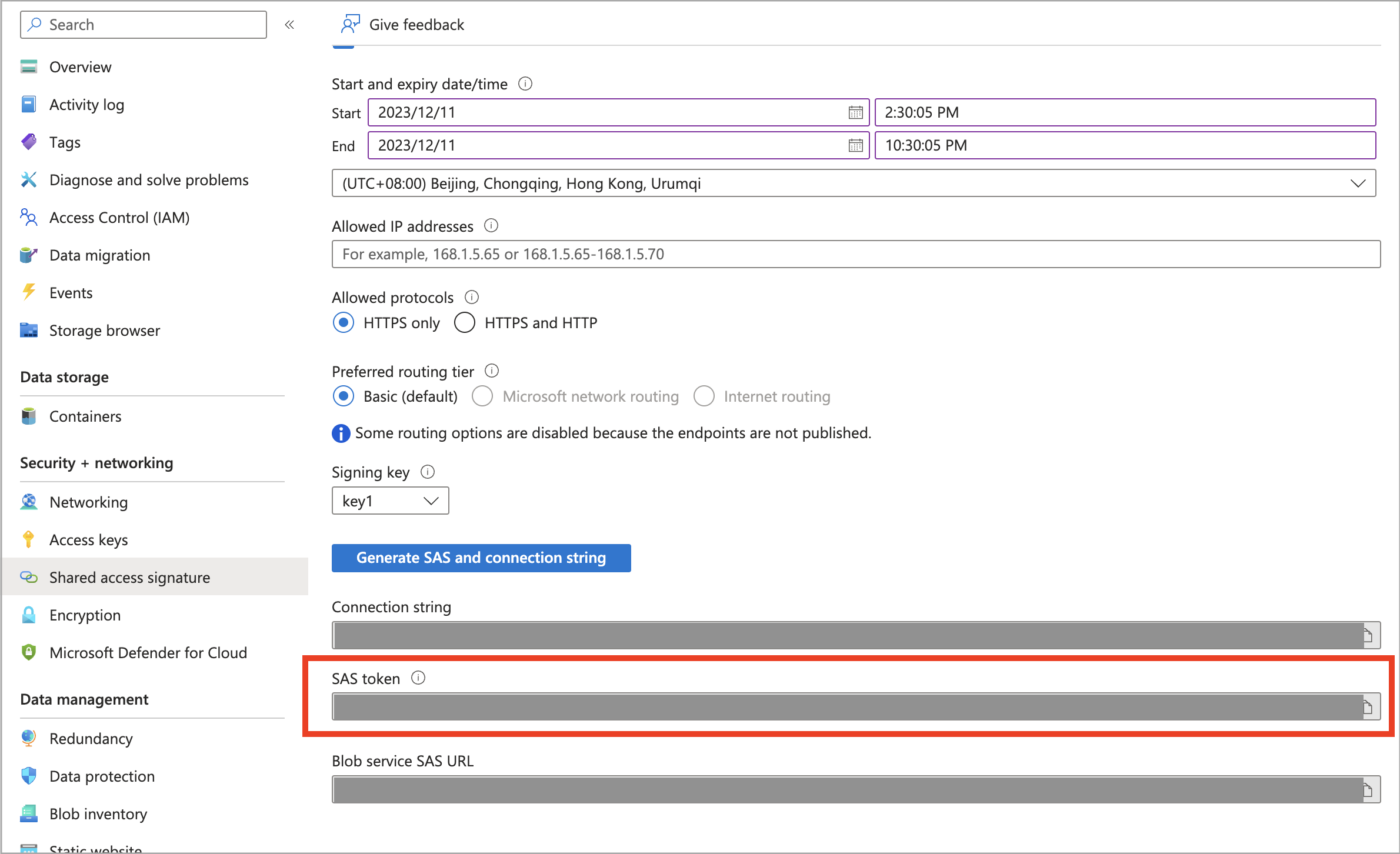

Follow these steps to obtain the SAS token of your Blob storage account:

-

Sign in to the Microsoft Azure portal and navigate to the Storage accounts service page.

-

Find your storage account and click its name.

-

In the left-side navigation pane, choose Shared access signature.

-

On the page that appears, check that you have properly made configurations in the Allowed services, Allowed resource types, and Allowed permissions sections, then select a key from the Signing key drop-down list and click Generate SAS and connection string.

You can click the copy icon to copy the generated SAS token.

Examples

External catalog

Create a Hive catalog named hive_catalog_azure:

CREATE EXTERNAL CATALOG hive_catalog_azure

PROPERTIES

(

"type" = "hive",

"hive.metastore.type" = "hive",

"hive.metastore.uris" = "thrift://xx.xx.xx.xx:9083",

"azure.blob.storage_account" = "<storage_account_name>",

"azure.blob.container" = "<container_name>",

"azure.blob.sas_token" = "<storage_account_SAS_token>"

);

CelerData now supports Hive catalog, Iceberg catalog, Hudi catalog, and Delta Lake catalog. For the detailed syntax and parameters for creating these external catalogs, see Hive catalog, [Iceberg ../data_source/catalog/iceberg/iceberg_catalog.mdatalog.md), [Hudi ../data_source/catalog/hudi_catalog.mdatalog.md), and Delta Lake catalog.

Broker Load

Create a Broker Load job to load data from the data file file1.parquet to the destination table table1 which belongs to the database named mydatabase within the CelerData cluster:

LOAD LABEL mydatabase.label_blob_sas

(

DATA INFILE("wasb[s]://<container_name>@<storage_account_name>.blob.core.windows.net/<path>/file1.parquet")

INTO TABLE table1

FORMAT AS "parquet"

)

WITH BROKER

(

"azure.blob.storage_account" = "<storage_account_name>",

"azure.blob.container" = "<container_name>",

"azure.blob.sas_token" = "<storage_account_SAS_token>"

)

PROPERTIES

(

"timeout" = "3600"

);

For the detailed syntax and parameters for creating a Broker Load job, see BROKER LOAD.

For a typical example of loading data from Azure with table creation DDL, see Batch load data from Azure cloud storage.

Azure Data Lake Storage Gen2

When you access ADLS Gen2, you may need to input a file path:

- If your storage account allows access over HTTP, use the abfs protocol and write the file path as

abfs://<container_name>@<storage_account_name>.dfs.core.windows.net/<path>/<file_name>. - If your storage account allows access over HTTPS, use the abfss protocol and write the file path as

abfss://<container_name>@<storage_account_name>.dfs.core.windows.net/<path>/<file_name>.

Managed Identity

User-assigned managed identities enable Azure resources to authenticate to cloud services (for example, Azure Key Vault) without storing credentials in code. Each managed identity is created as a standalone Azure resource, and has its own lifecycle. A single resource like a VM can utilize multiple user-assigned managed identities. Similarly, a single user-assigned managed identity can be shared across multiple resources (for example, multiple VMs).

Preparation

Before you can use the Managed Identity authentication method, you must find the managed identity that is referenced in the data credential of the CelerData cluster. Also, if you want the CelerData cluster to access more data in your storage account, you need to check that the managed identity is assigned the required read and write permissions on your storage account.

NOTICE

This authentication method now supports external catalog and Broker Load. Note that for external catalogs, the storage account you use for authentication must be the one used to store the data of your external data source.

Follow these steps:

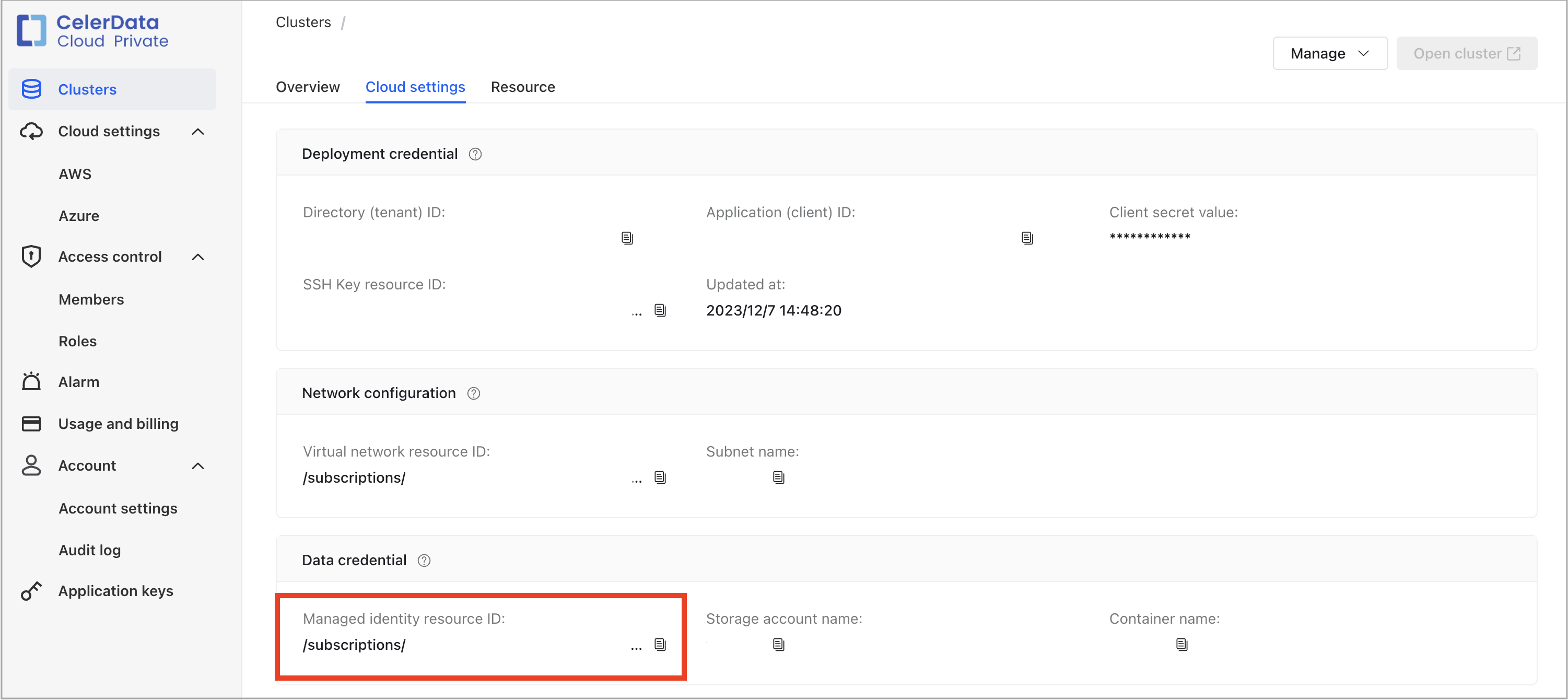

-

Sign in to the CelerData Cloud BYOC console.

-

On the Clusters page, find your CelerData cluster and click it.

-

On the Cloud settings tab, navigate to the Data credential section where you can find the Managed identity resource ID. The last element in the Managed identity resource ID is the name of the managed identity associated with the CelerData cluster. For example, if the Managed identity resource ID is /subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/celerdata-20240426-resource-group-001/providers/Microsoft.ManagedIdentity/userAssignedIdentities/celerdata-20240426-managed-identity, then the name of the managed identity is celerdata-20240426-managed-identity.

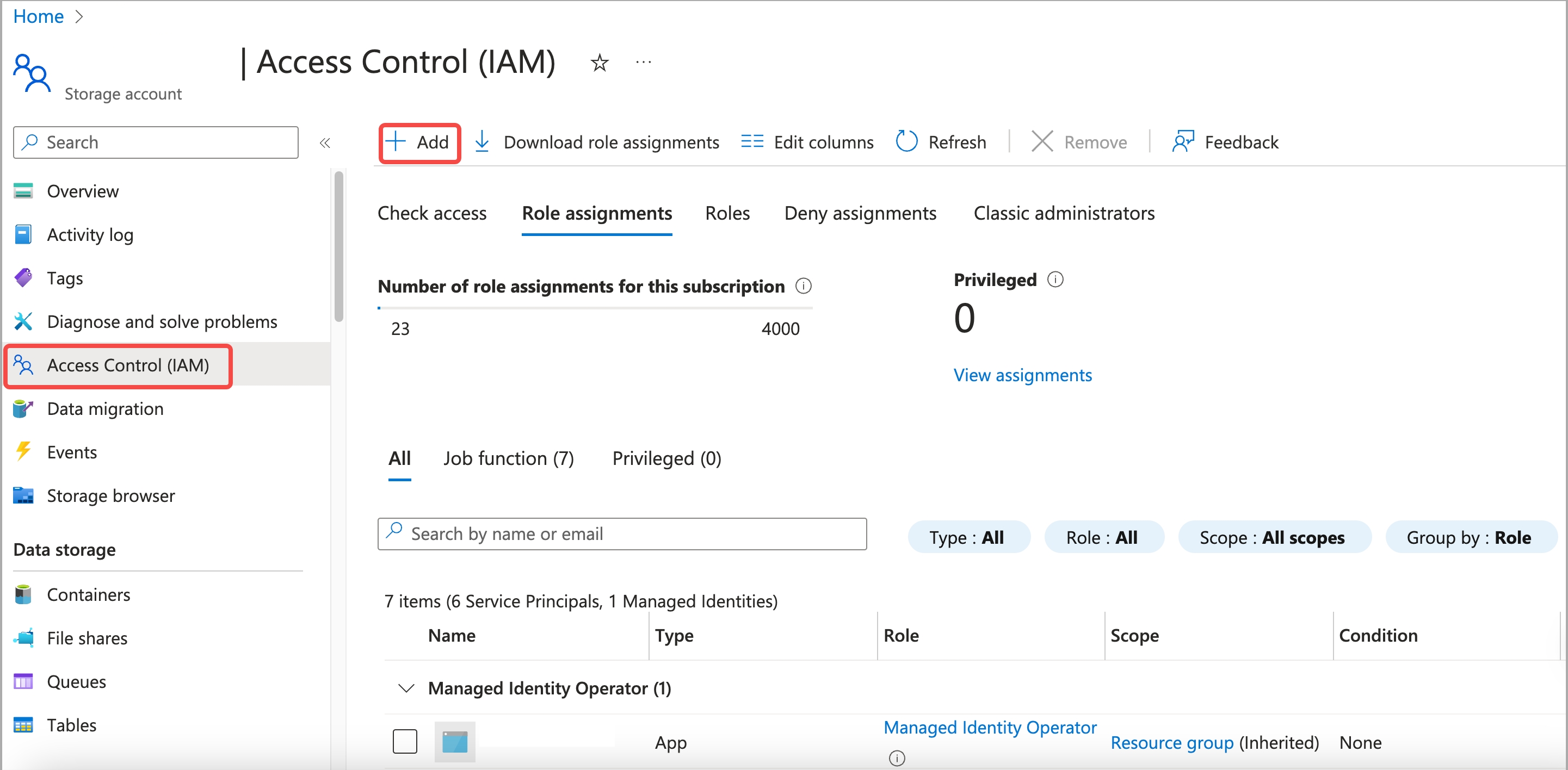

-

Sign in to the Microsoft Azure portal and navigate to the Storage accounts service page.

-

Find your storage account and click its name.

-

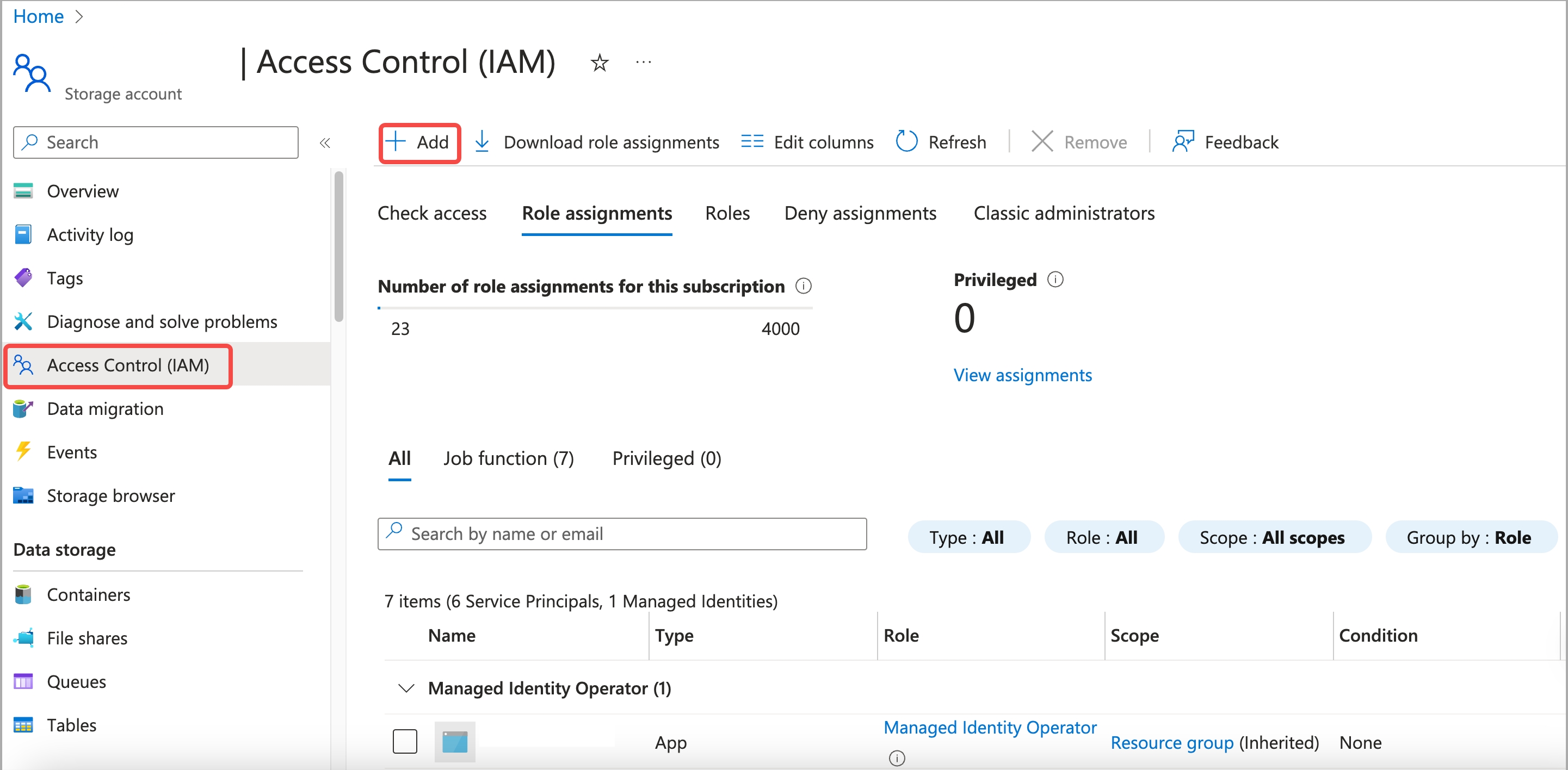

In the left-side navigation pane, choose Access control (IAM).

-

On the Role assignments tab of the page that appears, check the existing role assignments.

-

If the required read and write permissions on the storage account have not been assigned to the managed identity, click Add and choose Add role assignment. Then, add a proper role assignment:

a. On the Job function roles tab of the Add role assignment page, select a proper role assignment (for example, Storage Blob Data Owner, which allows for full access to the containers and data within your storage account) and click Next.

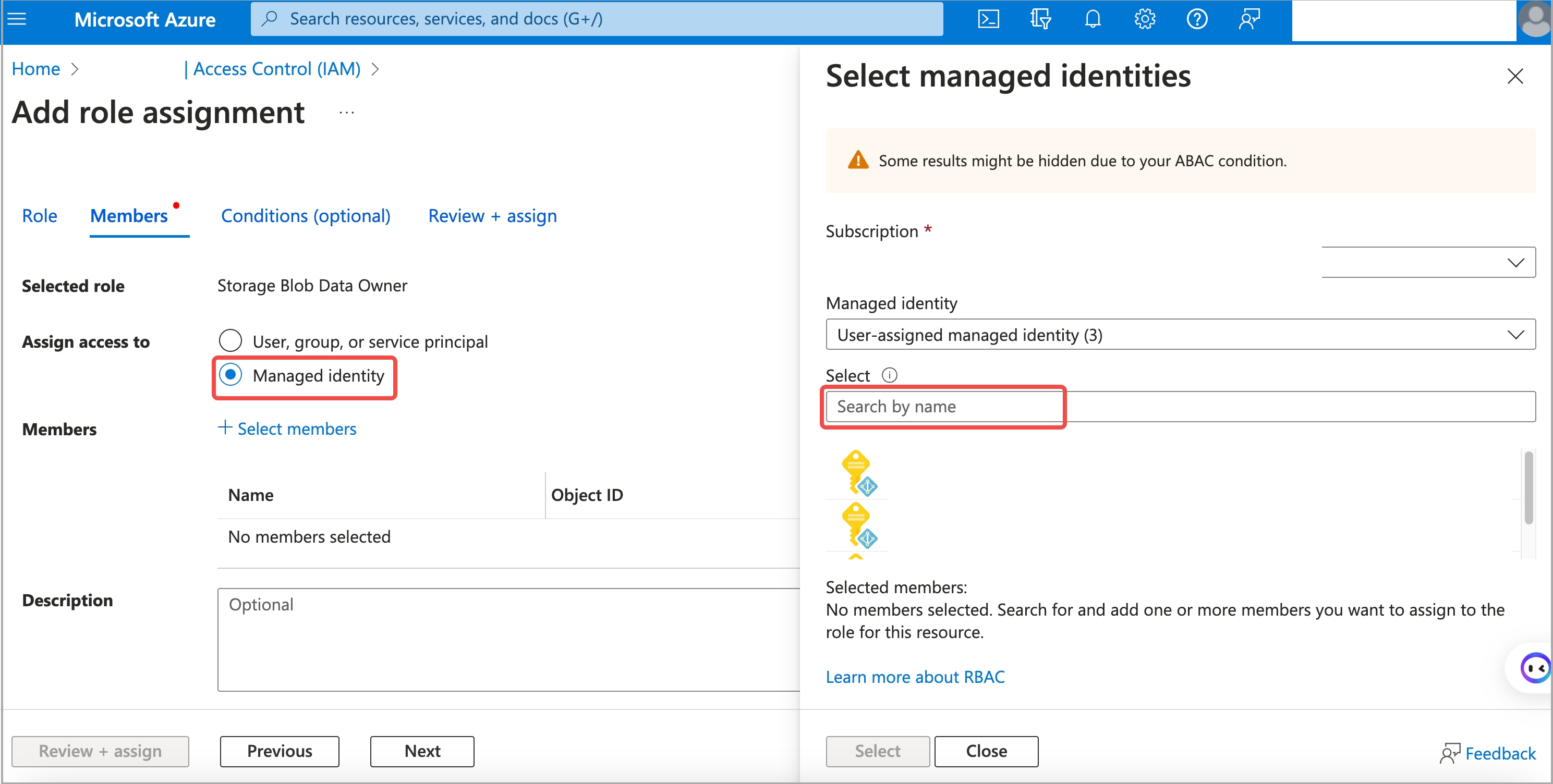

b. On the Members tab, select Managed identity for Assign access to.

c. For Members, click Select members. In the right-side pane that appears, select your Microsoft subscription from the Subscription drop-down list, select User Assigned Managed Identity from the Managed identity drop-down list, search for and select the managed identity from the Select section, and then click Select.

d. Click Review + assign. Then, on the Review + assign tab, review the information you have filled, and click Review + assign to add the role assignment.

Parameters

Configure the following parameters as credentials for accessing your ADLS Gen2.

| Parameter | Description |

|---|---|

| azure.adls2.oauth2_use_managed_identity | Specifies whether to enable the Managed Identity authentication method. Set the value to true. |

| azure.adls2.oauth2_tenant_id | The ID of the tenant of your ADLS Gen2 storage account. |

| azure.adls2.oauth2_client_id | The client ID of the managed identity that is referenced in the data credential of the destination CelerData cluster. |

Follow these steps to obtain the ID of the tenant of your ADLS Gen2 storage account:

- Sign in to the Microsoft Azure portal and navigate to the Tenant properties setting page.

- Find Tenant ID and click the copy icon to copy it.

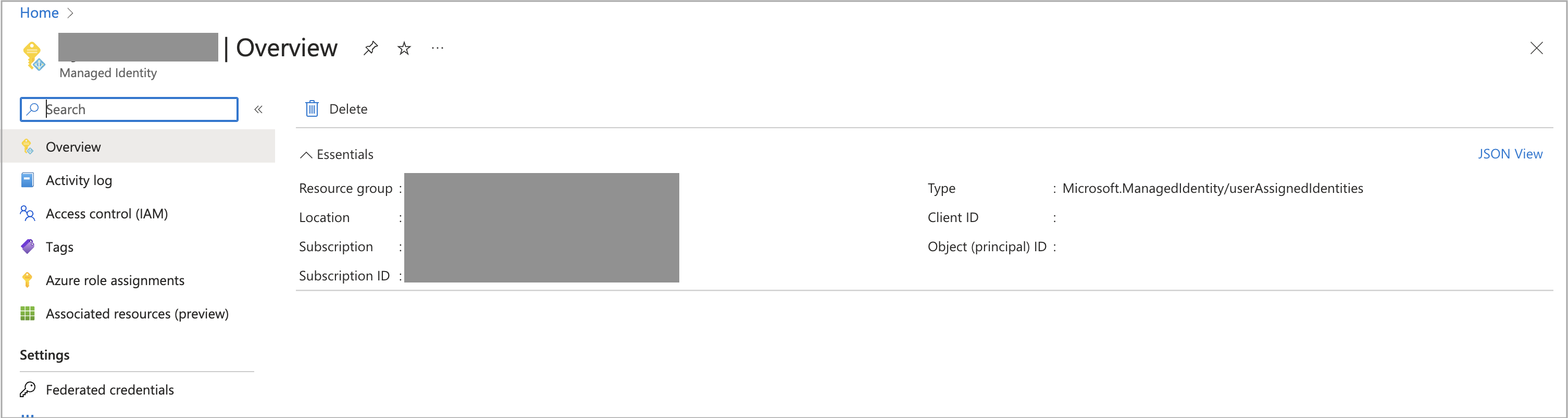

Follow these steps to obtain the client ID of the managed identity:

-

Sign in to the Microsoft Azure portal and navigate to the Managed Identities service page.

-

Find the managed identity based on its name which you have obtained in the previous step, and click it.

-

On the Overview page that is displayed, find the Client ID and click the copy icon to copy it.

Examples

External catalog

Create a Hive catalog named hive_catalog_azure:

CREATE EXTERNAL CATALOG hive_catalog_azure

PROPERTIES

(

"type" = "hive",

"hive.metastore.type" = "hive",

"hive.metastore.uris" = "thrift://xx.xx.xx.xx:9083",

"azure.adls2.oauth2_use_managed_identity" = "true",

"azure.adls2.oauth2_tenant_id" = "<service_principal_tenant_id>",

"azure.adls2.oauth2_client_id" = "<service_client_id>"

);

CelerData now supports Hive catalog, Iceberg catalog, Hudi catalog, and Delta Lake catalog. For the detailed syntax and parameters for creating these external catalogs, see Hive catalog, [Iceberg ../data_source/catalog/iceberg/iceberg_catalog.mdatalog.md), [Hudi ../data_source/catalog/hudi_catalog.mdatalog.md), and Delta Lake catalog.

Broker Load

Create a Broker Load job to load data from the data file file1.parquet to the destination table table1 which belongs to the database named mydatabase within the CelerData cluster:

LOAD LABEL mydatabase.label_gen2_identity

(

DATA INFILE("abfs[s]://<container_name>@<storage_account_name>.dfs.core.windows.net/<path>/file1.parquet")

INTO TABLE table1

FORMAT AS "parquet"

)

WITH BROKER

(

"azure.adls2.oauth2_use_managed_identity" = "true",

"azure.adls2.oauth2_tenant_id" = "<service_principal_tenant_id>",

"azure.adls2.oauth2_client_id" = "<service_client_id>"

)

PROPERTIES

(

"timeout" = "3600"

);

For the detailed syntax and parameters for creating a Broker Load job, see BROKER LOAD.

For a typical example of loading data from Azure with table creation DDL, see Batch load data from Azure cloud storage.

Shared Key (access key of storage account)

Using Shared Key to access ADLS Gen2 works in the same way as using Shared Key to access Blob Storage. For information about using and obtaining the shared key, see Blobe Storage > Shared Key.

NOTICE

This authentication method now supports external catalog and Broker Load. Note that for external catalogs, the storage account you use for authentication must be the one used to store the data of your external data source.

Parameters

Configure the following parameters as credentials for accessing your ADLS Gen2.

| Parameter | Description |

|---|---|

| azure.adls2.storage_account | The name of your ADLS Gen2 storage account. |

| azure.adls2.shared_key | The shared key (access key) of your ADLS Gen2 storage account. |

Examples

External catalog

Create a Hive catalog named hive_catalog_azure:

CREATE EXTERNAL CATALOG hive_catalog_azure

PROPERTIES

(

"type" = "hive",

"hive.metastore.type" = "hive",

"hive.metastore.uris" = "thrift://xx.xx.xx.xx:9083",

"azure.adls2.storage_account" = "<storage_account_name>",

"azure.adls2.shared_key" = "<storage_account_shared_key>"

);

CelerData now supports Hive catalog, Iceberg catalog, Hudi catalog, and Delta Lake catalog. For the detailed syntax and parameters for creating these external catalogs, see Hive catalog, [Iceberg ../data_source/catalog/iceberg/iceberg_catalog.mdatalog.md), [Hudi ../data_source/catalog/hudi_catalog.mdatalog.md), and Delta Lake catalog.

Broker Load

Create a Broker Load job to load data from the data file file1.parquet to the destination table table1 which belongs to the database named mydatabase within the CelerData cluster:

LOAD LABEL mydatabase.label_gen2_key

(

DATA INFILE("abfs[s]://<container_name>@<storage_account_name>.dfs.core.windows.net/<path>/file1.parquet")

INTO TABLE table1

FORMAT AS "parquet"

)

WITH BROKER

(

"azure.adls2.storage_account" = "<storage_account_name>",

"azure.adls2.shared_key" = "<storage_account_shared_key>"

)

PROPERTIES

(

"timeout" = "3600"

);

For the detailed syntax and parameters for creating a Broker Load job, see BROKER LOAD.

For a typical example of loading data from Azure with table creation DDL, see Batch load data from Azure cloud storage.

Service Principal

Preparation

Before you can use this authentication method, make sure that you already have a service principal, or create a new one. For information about service principals in Azure, see Create a service principal in Azure.

Then, check that the service principal is assigned the required read and write permissions on your storage account. Follow these steps:

NOTICE

This authentication method now supports external catalog and Broker Load. Note that for external catalogs, the storage account you use for authentication must be the one used to store the data of your external data source, and the service principal must be the one used to deploy your CelerData cluster.

-

Sign in to the Microsoft Azure portal and navigate to the Storage accounts service page.

-

Find your ADLS Gen2 storage account and click its name.

-

In the left-side navigation pane, choose Access control (IAM).

-

On the Role assignments tab of the page that appears, check the existing role assignments.

-

If the required read and write permissions on the storage account have not been assigned to the service principal, click Add and choose Add role assignment. Then, add a proper role assignment:

a. On the Job function roles tab of the Add role assignment page, select a proper role assignment (for example, Storage Blob Data Owner, which allows for full access to the containers and data within your storage account) and click Next.

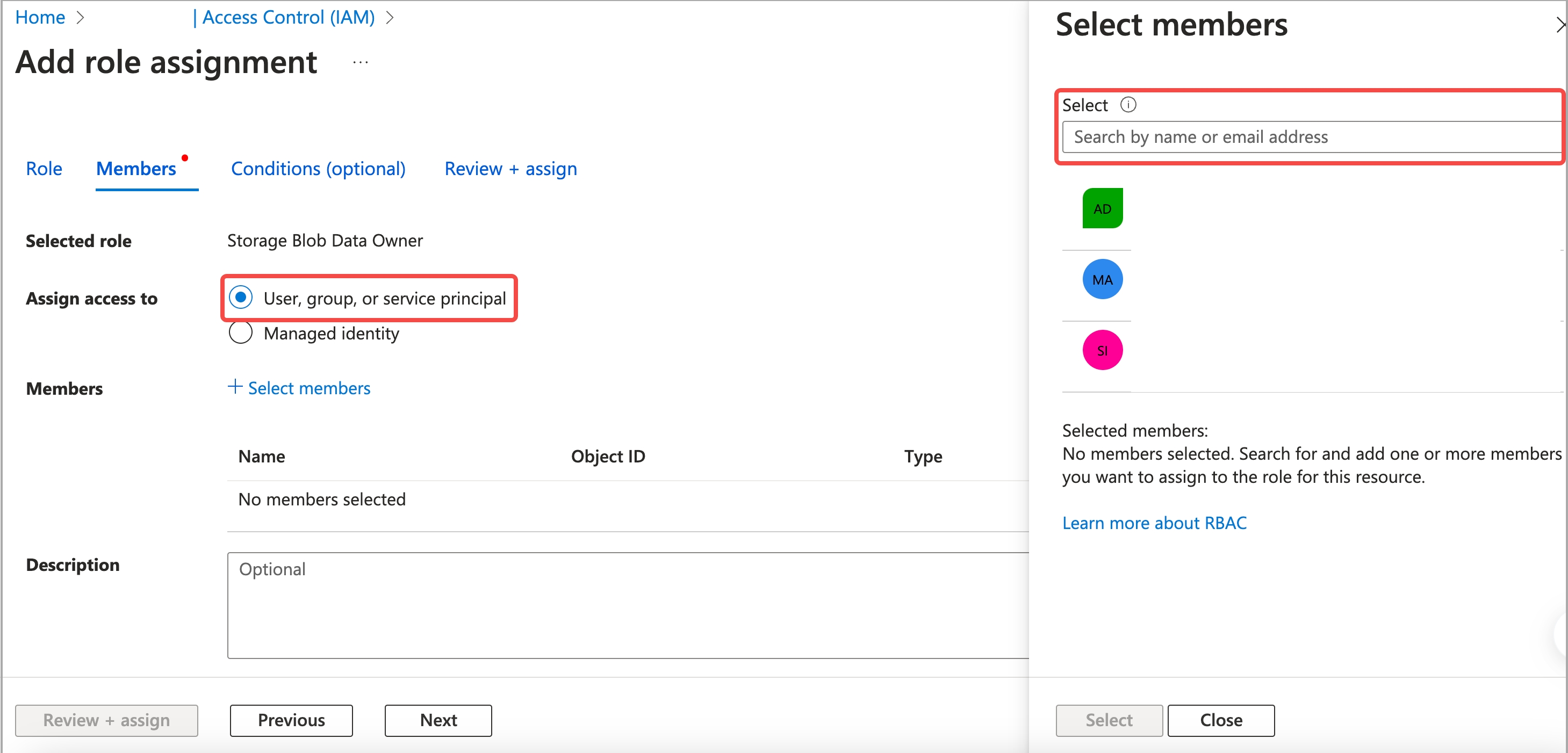

b. On the Members tab, select User, group, or service principal for Assign access to.

c. For Members, click Select members. In the right-side pane that appears, search for and select the application you have registered, then click Select.

d. Click Review + assign. On the Review + assign tab, review the information you have filled. Then, click Review + assign again to add the role assignment.

Parameters

Configure the following parameters as credentials for accessing your ADLS Gen2.

| Parameter | Description |

|---|---|

| azure.adls2.oauth2_client_id | The application (client) ID of the service principal. |

| azure.adls2.oauth2_client_secret | The value of the client secret of the service principal. |

| azure.adls2.oauth2_client_endpoint | The OAuth 2.0 token endpoint (v1) of the service principal. |

NOTE

Client secret values cannot be viewed, except for immediately after creation. After you create a client secret for the service principal, be sure to save the value of the client secret before you leave the page.

Follow these steps to obtain the application (client) ID and OAuth 2.0 token endpoint (v1) of the service principal:

-

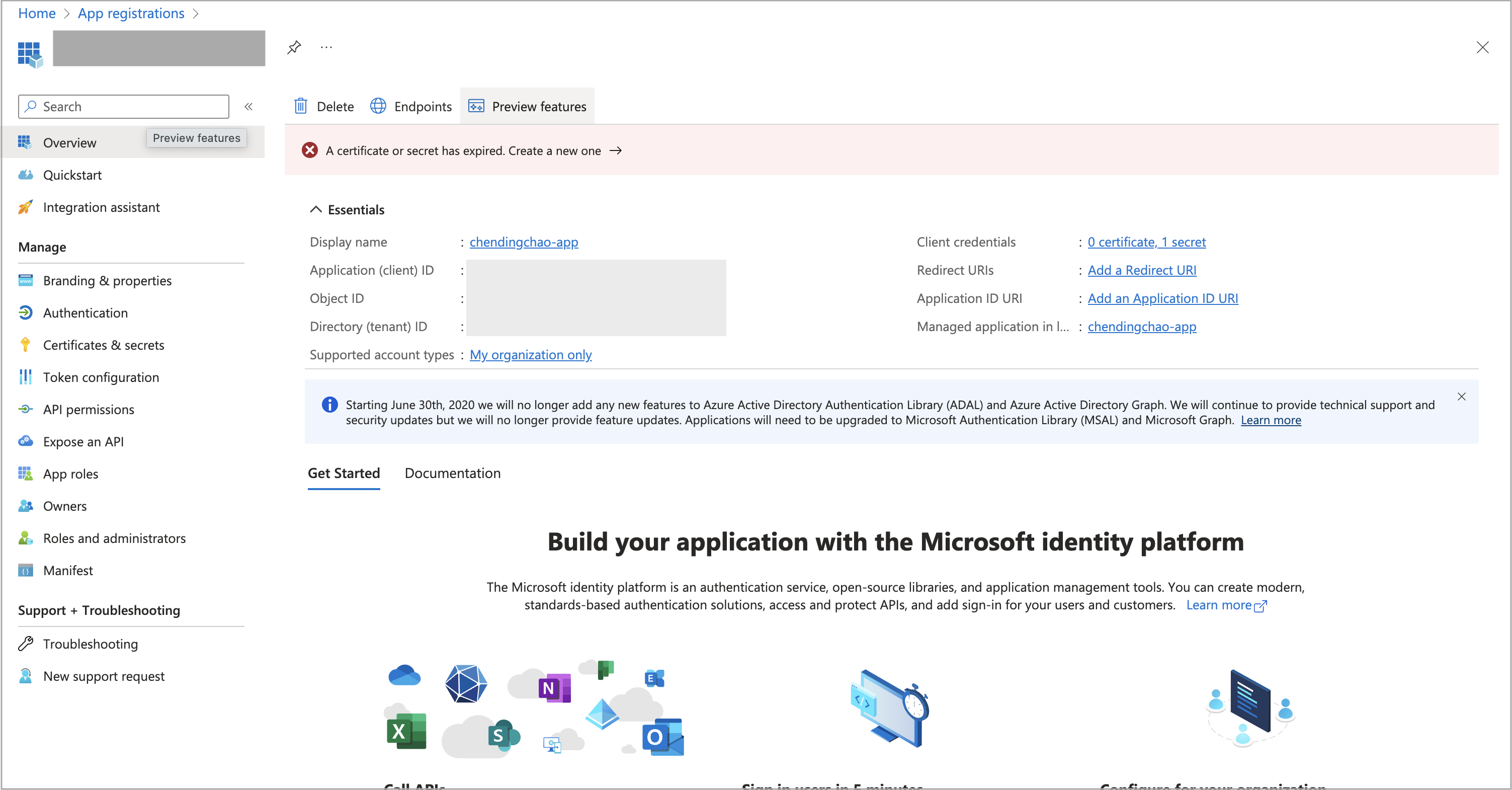

Sign in to the Microsoft Azure portal and navigate to the App registrations service page.

-

Find your registered application and click its name.

-

On the Overview page that is displayed, find the Application (client) ID and click the copy icon to copy it.

-

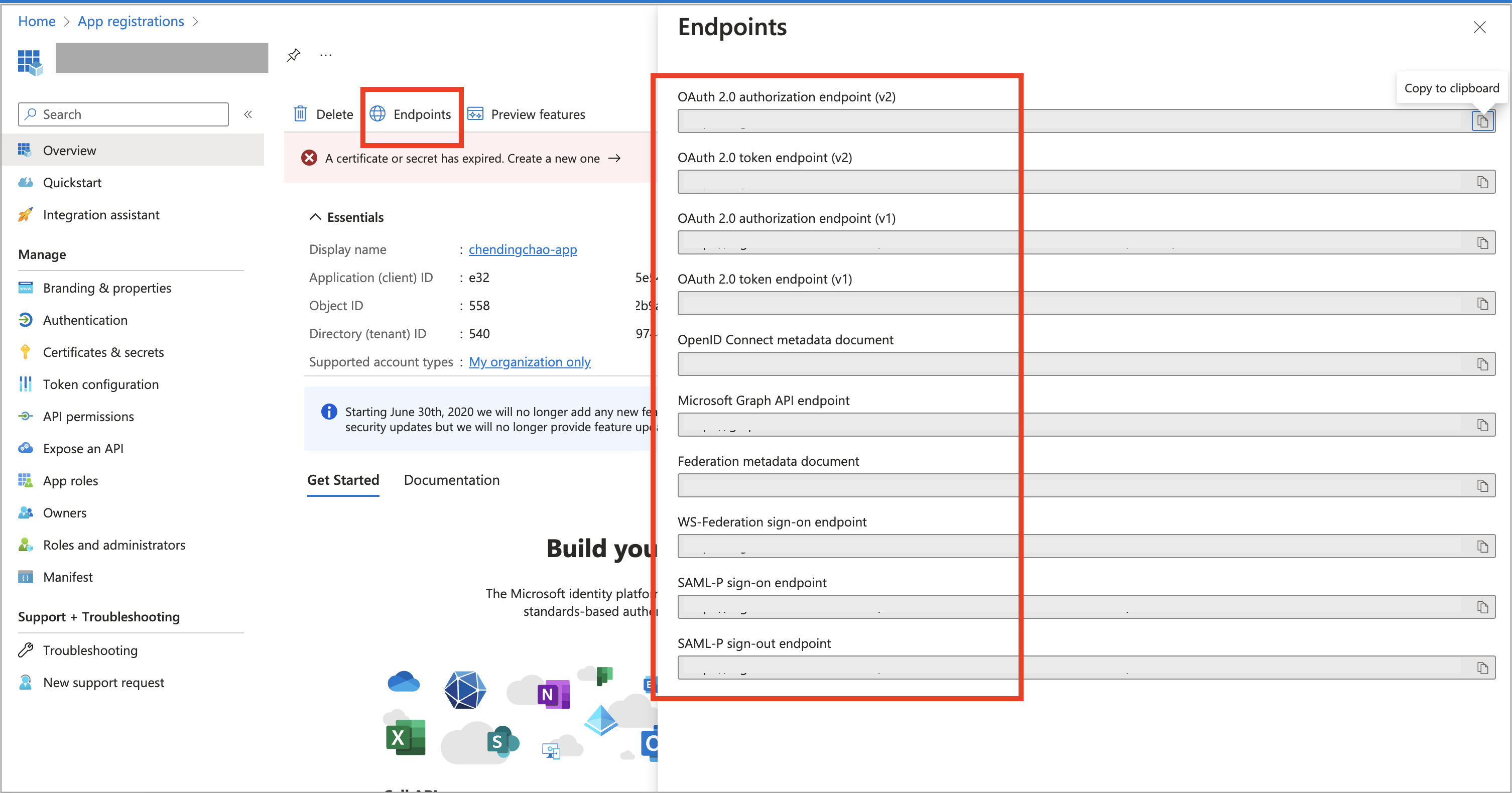

Click Endpoints in the upper area of the Overview page.

-

In the right-side Endpoints pane, find the OAuth 2.0 token endpoint (v1) and click the copy icon to copy it.

Examples

External catalog

Create a Hive catalog named hive_catalog_azure:

CREATE EXTERNAL CATALOG hive_catalog_azure

PROPERTIES

(

"type" = "hive",

"hive.metastore.type" = "hive",

"hive.metastore.uris" = "thrift://xx.xx.xx.xx:9083",

"azure.adls2.oauth2_client_id" = "<service_client_id>",

"azure.adls2.oauth2_client_secret" = "<service_principal_client_secret>",

"azure.adls2.oauth2_client_endpoint" = "<service_principal_client_endpoint>"

);

CelerData now supports Hive catalog, Iceberg catalog, Hudi catalog, and Delta Lake catalog. For the detailed syntax and parameters for creating these external catalogs, see Hive catalog, [Iceberg ../data_source/catalog/iceberg/iceberg_catalog.mdatalog.md), [Hudi ../data_source/catalog/hudi_catalog.mdatalog.md), and Delta Lake catalog.

Broker Load

Create a Broker Load job to load data from the data file file1.parquet to the destination table table1 which belongs to the database named mydatabase within the CelerData cluster:

LOAD LABEL test_db.label_gen2_principal

(

DATA INFILE("abfs[s]://<container_name>@<storage_account_name>.dfs.core.windows.net/<path>/file1.parquet")

INTO TABLE table1

FORMAT AS "parquet"

)

WITH BROKER

(

"azure.adls2.oauth2_client_id" = "<service_principle_client_id>",

"azure.adls2.oauth2_client_secret" = "<service_principal_client_secret>",

"azure.adls2.oauth2_client_endpoint" = "<service_principal_client_endpoint>"

)

PROPERTIES

(

"timeout" = "3600"

);

For the detailed syntax and parameters for creating a Broker Load job, see BROKER LOAD.

For a typical example of loading data from Azure with table creation DDL, see Batch load data from Azure cloud storage.

Azure Data Lake Storage Gen1

Azure Data Lake Storage Gen1 (ADLS Gen1) is currently no longer publicly accessible in Azure. But if you have data stored in ADLS Gen1, you can still access it by using the following authentication methods.

ADLS Gen1 supports the adl protocol. If you need to input a file path when you access ADLS Gen1, write the file path as adl://<data_lake_storage_gen1_name>.azuredatalakestore.net/<path>/<file_name>.

Managed Service Identity

Parameters

Configure the following parameters as credentials for accessing your ADLS Gen1.

| Parameter | Description |

|---|---|

| azure.adls1.use_managed_service_identity | Specifies whether to enable the Managed Service Identity authentication method. Set the value to true. |

Examples

External catalog

Create a Hive catalog named hive_catalog_azure:

CREATE EXTERNAL CATALOG hive_catalog_azure

PROPERTIES

(

"type" = "hive",

"hive.metastore.type" = "hive",

"hive.metastore.uris" = "thrift://xx.xx.xx.xx:9083",

"azure.adls1.use_managed_service_identity" = "true"

);

CelerData now supports Hive catalog, Iceberg catalog, Hudi catalog, and Delta Lake catalog. For the detailed syntax and parameters for creating these external catalogs, see Hive catalog, [Iceberg ../data_source/catalog/iceberg/iceberg_catalog.mdatalog.md), [Hudi ../data_source/catalog/hudi_catalog.mdatalog.md), and Delta Lake catalog.

Broker Load

Create a Broker Load job to load data from the data file file1.parquet to the destination table table1 which belongs to the database named mydatabase within the CelerData cluster:

LOAD LABEL mydatabase.label_gen1_identity

(

DATA INFILE("adl://<data_lake_storage_gen1_name>.azuredatalakestore.net/<path>/file1.parquet")

INTO TABLE table1

FORMAT AS "parquet"

)

WITH BROKER

(

"azure.adls1.use_managed_service_identity" = "true"

)

PROPERTIES

(

"timeout" = "3600"

);

For the detailed syntax and parameters for creating a Broker Load job, see BROKER LOAD.

For a typical example of loading data from Azure with table creation DDL, see Batch load data from Azure cloud storage.

Service Principal

Using Service Principal to access ADLS Gen1 works in the same way as using Service Principal to access ADLS Gen2. For information about using the service principal and obtaining its information, see Azure Data Lake Storage Gen2 > Service Principal.

NOTICE

This authentication method now supports external catalog and Broker Load. Note that for external catalogs, the storage account you use for authentication must be the one used to store the data of your external data source, and the service principal must be the one used to deploy your CelerData cluster.

Parameters

Configure the following parameters as credentials for accessing your ADLS Gen1.

| Parameter | Description |

|---|---|

| azure.adls1.oauth2_client_id | The application (client) ID of the service principal. |

| azure.adls1.oauth2_credential | The value of the client secret of the service principal. |

| azure.adls1.oauth2_endpoint | The OAuth 2.0 token endpoint (v1) of the service principal or application. |

Examples

External catalog

Create a Hive catalog named hive_catalog_azure:

CREATE EXTERNAL CATALOG hive_catalog_azure

PROPERTIES

(

"type" = "hive",

"hive.metastore.type" = "hive",

"hive.metastore.uris" = "thrift://xx.xx.xx.xx:9083",

"azure.adls1.oauth2_client_id" = "<application_client_id>",

"azure.adls1.oauth2_credential" = "<application_client_credential>",

"azure.adls1.oauth2_endpoint" = "<OAuth_2.0_authorization_endpoint_v2>"

);

CelerData now supports Hive catalog, Iceberg catalog, Hudi catalog, and Delta Lake catalog. For the detailed syntax and parameters for creating these external catalogs, see Hive catalog, [Iceberg ../data_source/catalog/iceberg/iceberg_catalog.mdatalog.md), [Hudi ../data_source/catalog/hudi_catalog.mdatalog.md), and Delta Lake catalog.

Broker Load

Create a Broker Load job to load data from the data file file1.parquet to the destination table table1 which belongs to the database named mydatabase within the CelerData cluster:

LOAD LABEL mydatabase.label_gen1_principal

(

DATA INFILE("adl://<data_lake_storage_gen1_name>.azuredatalakestore.net/<path>/file1.parquet")

INTO TABLE table1

FORMAT AS "parquet"

)

WITH BROKER

(

"azure.adls1.oauth2_client_id" = "<application_client_id>",

"azure.adls1.oauth2_credential" = "<application_client_credential>",

"azure.adls1.oauth2_endpoint" = "<OAuth_2.0_authorization_endpoint_v2>"

)

PROPERTIES

(

"timeout" = "3600"

);

For the detailed syntax and parameters for creating a Broker Load job, see BROKER LOAD.

For a typical example of loading data from Azure with table creation DDL, see Batch load data from Azure cloud storage.